Security operations · 6 MIN READ · ANTHONY RANDAZZO AND BRUCE POTTER · DEC 1, 2020 · TAGS: Cloud security / MDR

It’s no secret that we’re fans of red team exercises here at Expel. What we love even more, though, is when we get to detect a red team attack in AWS Cloud.

Get ready to nerd out with us as we walk you through a really interesting red team exercise we recently spotted in a customer’s AWS cloud environment.

What is a red team engagement?

First things first: Red team assessments are a great way to understand your detection and investigative capabilities and to stress test your Incident Response (IR) plan. Penetration tests are a bit different. Here’s the best way to think about the two and how they differ:

Penetration test: “I need a pen test. This is the scope (boundaries). Tell me about all of the security holes within the scope of this assessment. In the meantime, I’ll be watching our detections.”

Red team: “This is your target. Try to get into my environment with no help and achieve that target, while also evading my defensive measures.”

At Expel, we find ourselves on the receiving end of a lot of penetration tests and red team engagements – in fact, we’ve even got our own playbook to help our customers plan them. (Yes, we regularly encourage our customers to put our Security Operations Center to the test.)

For the duration of this blog post, we’ll focus on what we’ve defined as a red team engagement.

Can you “red team” in AWS?

Before the cloud was a thing, red team engagements tended to follow a familiar pattern: the crafty “attackers” phished a user with a backdoored malicious document, grabbed some Microsoft credentials and pressed a big flashing “keys to the kingdom” button to achieve their objective. (Okay, there wasn’t really a big flashing button, but sometimes it felt that way.)

Fast forward to today: the model I just described looks very different when you try to execute it in AWS.

Most access is managed and provisioned through a third-party identity management (IdM) provider like Okta or OneLogin. To get in, an attacker would have to find exposed AWS access keys somewhere else or compromise a user protected by multi-factor authentication (MFA) in an IdM such as Okta.

That’s exactly what happened recently when one of our customers brought in a red team to break into their AWS environment, and it sent our analysts springing into action.

Let’s dive into what we uncovered during this red team exercise, what our team learned from it and how you can protect your own org from similar attacks.

How we discovered a crafty phishing attempt in AWS cloud

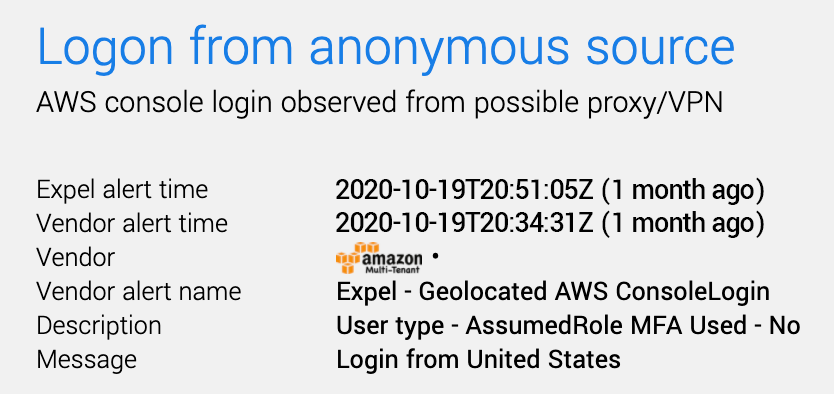

The first hint that something was wrong came when one of our analysts spotted a login to a customer’s AWS console from an anonymous source. We immediately sprang into action, checking whether that user had previously logged in from known proxies or endpoints.
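The “has this user logged in from here before?” check can be sketched in Python. This is a minimal, hypothetical example, not Expel’s detection logic: it assumes CloudTrail records have already been parsed into dictionaries in chronological order, and it only asks whether a principal’s `ConsoleLogin` came from a source IP never seen for that principal. Deciding whether a new IP belongs to an anonymizing proxy or VPN would need a separate enrichment step that isn’t shown.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable


def flag_new_console_login_ips(events: Iterable[dict]) -> list[dict]:
    """Return ConsoleLogin events whose source IP is new for that principal.

    Assumes `events` are parsed CloudTrail records in chronological order.
    """
    seen_ips: dict[str, set[str]] = defaultdict(set)
    flagged: list[dict] = []
    for event in events:
        if event.get("eventName") != "ConsoleLogin":
            continue
        principal = event.get("userIdentity", {}).get("arn", "unknown")
        source_ip = event.get("sourceIPAddress")
        # The first login for a principal only seeds its baseline; after that,
        # any IP outside the baseline is worth an analyst's attention.
        if seen_ips[principal] and source_ip not in seen_ips[principal]:
            flagged.append(event)
        seen_ips[principal].add(source_ip)
    return flagged
```

For console logins federated through Okta, `userIdentity.arn` is typically an assumed-role session ARN, so in practice the baseline is kept per role session rather than per Okta user.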

Expel Workbench lead alert

But wait a second. Isn’t this customer’s AWS access provisioned through Okta? Why didn’t we get any Okta alerts?

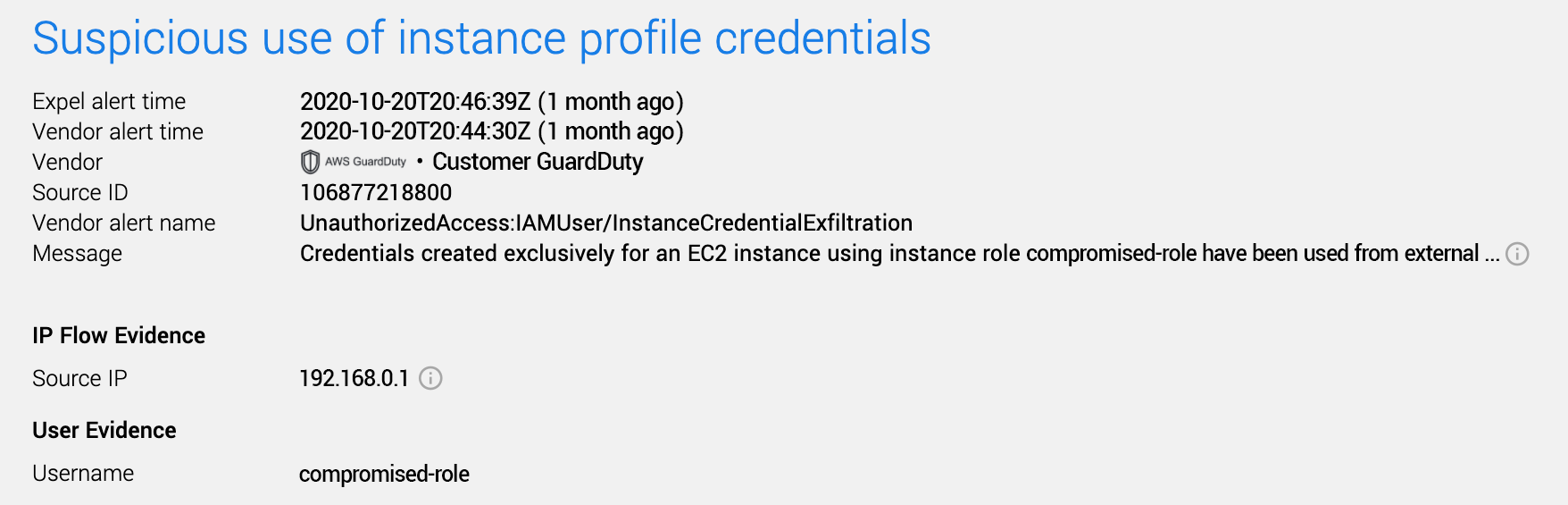

Shortly thereafter, an Amazon GuardDuty alert fired: those same access keys were being used from a Python software development kit (SDK) rather than the AWS console, and someone was enumerating the permissions attached to them.

The TL;DR on all of that? Not good.

We immediately escalated this as an incident to the customer to understand whether this series of events was expected.
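GuardDuty’s own detection logic isn’t public, but the pattern it flagged (SDK use plus permission enumeration) can be roughly approximated from raw CloudTrail records. This sketch rests on assumptions: it treats a `userAgent` containing `Boto3/` or `Botocore/` as evidence of the Python SDK, and it counts how many distinct calls from a hypothetical, non-exhaustive list of permission-discovery APIs each access key made. Keys that reach an arbitrary threshold are returned with the calls they made.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable

# Hypothetical, non-exhaustive watchlist of permission-discovery calls.
ENUMERATION_CALLS = {
    "GetCallerIdentity",
    "GetAccountAuthorizationDetails",
    "ListAttachedUserPolicies",
    "ListAttachedRolePolicies",
    "ListUserPolicies",
    "ListRolePolicies",
    "GetUserPolicy",
    "GetRolePolicy",
    "ListRoles",
    "ListUsers",
}
# Assumed markers of the Python SDK in the CloudTrail userAgent field.
SDK_MARKERS = ("Boto3/", "Botocore/")


def sdk_enumeration_by_key(events: Iterable[dict], threshold: int = 3) -> dict[str, set[str]]:
    """Map access key ID -> distinct enumeration calls made via the Python SDK."""
    calls_by_key: dict[str, set[str]] = defaultdict(set)
    for event in events:
        agent = event.get("userAgent", "")
        if not any(marker in agent for marker in SDK_MARKERS):
            continue  # skip console and other non-SDK traffic
        if event.get("eventName") in ENUMERATION_CALLS:
            key_id = event.get("userIdentity", {}).get("accessKeyId", "unknown")
            calls_by_key[key_id].add(event["eventName"])
    # Only report keys that probed several different permission APIs.
    return {key: calls for key, calls in calls_by_key.items() if len(calls) >= threshold}
```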

Observing the end goal of the red team

It turned out we were witnessing the beginning of a red team exercise, so we let the red team’s attack play out to see what else we’d uncover.

That’s when things got interesting.

First, we needed to understand how the red team got access to multiple AWS accounts when they were protected by Okta with MFA. Our theory was that these users had been phished, but we had to prove it.

We dove into the Okta log data to look for anomalies: anything that indicated something fishy (pun intended). When we correlated it with the Duo MFA logs, we spotted some weirdness. It looked like the users’ session tokens may have been intercepted, and all of these signs pointed to a crafty open-source phishing kit like Evilginx.
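The post doesn’t say exactly what the Okta/Duo weirdness was, so here is one plausible correlation, offered as a hypothetical sketch. With a MitM kit like Evilginx, Okta sees the sign-in coming from the attacker’s proxy, while the Duo push is approved on the victim’s real phone, so the two logs can disagree about location. The record types below are simplified, made-up views loosely modeled on Okta System Log and Duo authentication log entries; the country fields are assumed to be enriched upstream, and the two-minute window is arbitrary.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class OktaSignIn:
    """Hypothetical, trimmed-down view of an Okta sign-in event."""
    user: str
    time: datetime
    country: str  # country of the client IP Okta saw


@dataclass
class DuoApproval:
    """Hypothetical, trimmed-down view of a Duo authentication log entry."""
    user: str
    time: datetime
    country: str  # country of the device that approved the push


def location_mismatches(
    sign_ins: list[OktaSignIn],
    approvals: list[DuoApproval],
    window: timedelta = timedelta(minutes=2),
) -> list[tuple[OktaSignIn, DuoApproval]]:
    """Pair each sign-in with nearby approvals for the same user; keep disagreeing pairs."""
    findings = []
    for sign_in in sign_ins:
        for approval in approvals:
            same_user = approval.user == sign_in.user
            close_in_time = abs(approval.time - sign_in.time) <= window
            if same_user and close_in_time and approval.country != sign_in.country:
                findings.append((sign_in, approval))
    return findings
```

Expect false positives from users on VPNs; a mismatch is a lead to investigate, not proof of a MitM.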

Now that we knew how the red team got in, what were they after? We watched them compromise a few sets of instance credentials and escalate privileges through role assumptions, and then it seemed they’d found what they were looking for. They made an AWS API call, StartSession, to AWS Systems Manager (roughly the AWS counterpart to Microsoft SCCM).

Expel Workbench AWS GuardDuty alert

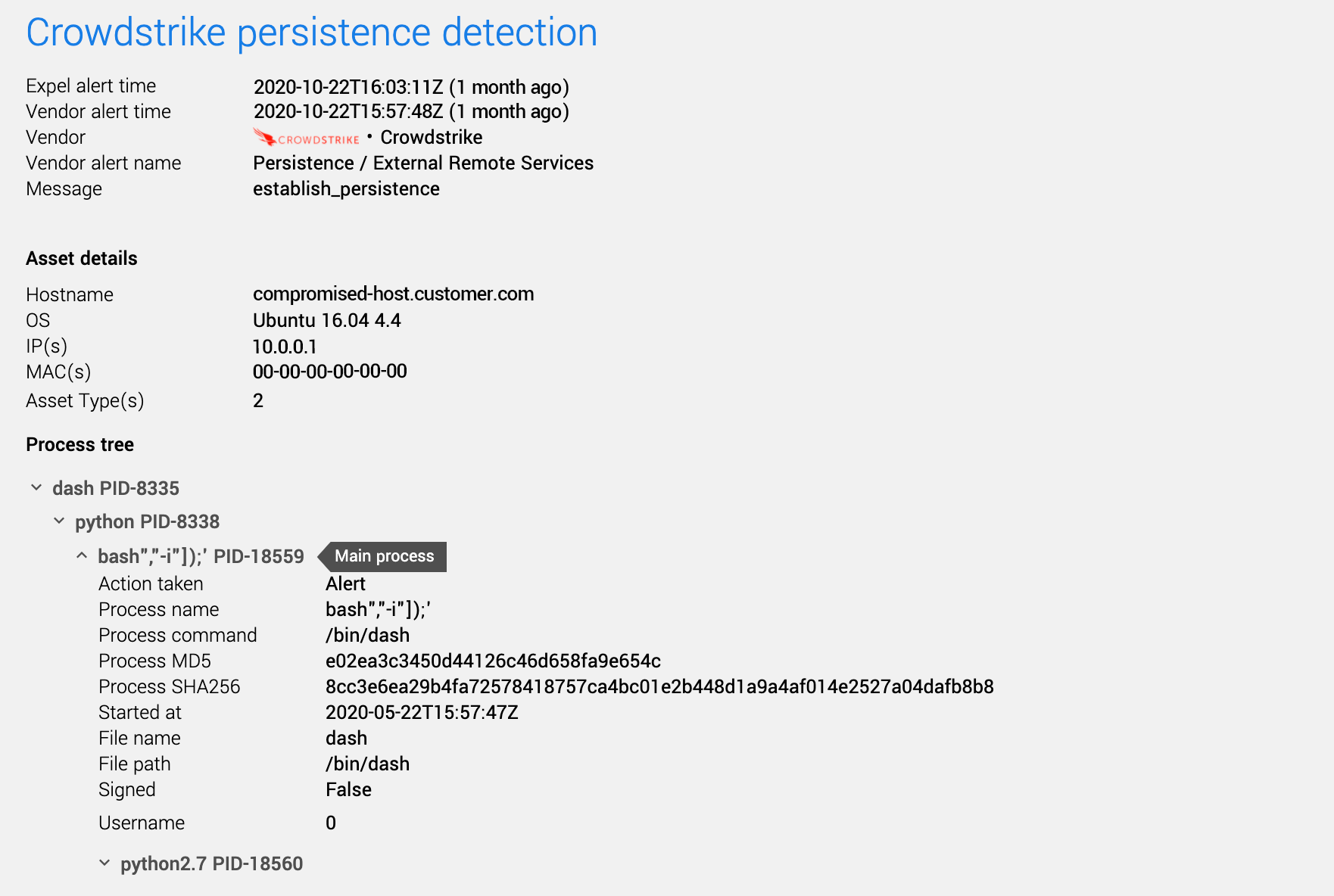
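Tracing a chain of role assumptions like this is essentially a graph walk over CloudTrail `AssumeRole` events. The sketch below is a simplified illustration under several assumptions: records are parsed CloudTrail dictionaries; a helper maps assumed-role session ARNs back to role ARNs while ignoring IAM role paths and assuming the standard `aws` partition; and `StartSession` events are assumed to carry the target instance ID in `requestParameters.target`. It returns every role reachable from a starting principal, plus any Session Manager sessions those roles started.

```python
from __future__ import annotations

from collections import defaultdict, deque


def to_role_arn(arn: str) -> str:
    """Map an STS assumed-role session ARN to its IAM role ARN; other ARNs pass through."""
    prefix, _, resource = arn.partition(":assumed-role/")
    if not resource:
        return arn
    account_id = prefix.split(":")[4]
    role_name = resource.split("/")[0]
    return f"arn:aws:iam::{account_id}:role/{role_name}"


def trace_role_chain(events: list[dict], start_arn: str) -> tuple[set[str], dict[str, list[str]]]:
    """Return (roles reachable from start_arn via AssumeRole, SSM session targets per role)."""
    edges: dict[str, set[str]] = defaultdict(set)
    sessions_by_role: dict[str, list[str]] = defaultdict(list)
    for event in events:
        caller = to_role_arn(event.get("userIdentity", {}).get("arn", ""))
        params = event.get("requestParameters") or {}
        if event.get("eventName") == "AssumeRole" and "roleArn" in params:
            edges[caller].add(params["roleArn"])
        elif event.get("eventName") == "StartSession" and "target" in params:
            sessions_by_role[caller].append(params["target"])

    # Breadth-first walk from the starting principal over AssumeRole edges.
    start = to_role_arn(start_arn)
    reached = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for target in edges.get(current, ()):
            if target not in reached:
                reached.add(target)
                queue.append(target)
    sessions = {role: sessions_by_role[role] for role in reached if role in sessions_by_role}
    return reached, sessions
```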

A few minutes later, a CrowdStrike Falcon EDR alert fired for a Python backdoor. The red team now had sudo access on that Linux EC2 instance.

Expel Workbench CrowdStrike alert

At this point, it was time to pivot to the CrowdStrike Falcon event data to see what they were up to. After browsing the file system, the red team found local credentials for an Amazon Redshift database.

This was it. The crown jewels for that business: all of their customer data.

```
/usr/bin/perl -w /usr/bin/psql -h crownjewels.customer.com -U username -c d
```
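The command line above is consistent with `psql` being a Perl wrapper script, as it is on Debian-based distributions, which would explain why the process shows up under `/usr/bin/perl`. A hunt for similar activity in EDR process data might parse command lines and pull out database connection targets. This is a hypothetical sketch: the watchlist of client names is illustrative, and it only understands the short `-h` and `-U` flags, not `--host=`-style options.

```python
from __future__ import annotations

import os
import shlex

# Hypothetical watchlist of database client binaries.
DB_CLIENTS = {"psql", "mysql", "mongo", "redis-cli"}


def db_client_connection(command_line: str) -> dict[str, str] | None:
    """Extract client, host and user from a database-client command line, if any."""
    argv = shlex.split(command_line)
    # The client may not be argv[0] (e.g. "perl -w /usr/bin/psql ..."), so scan
    # for the first argument whose basename is a known client.
    for i, arg in enumerate(argv):
        client = os.path.basename(arg)
        if client not in DB_CLIENTS:
            continue
        rest = argv[i + 1:]
        info = {"client": client}
        for flag, field in (("-h", "host"), ("-U", "user")):
            if flag in rest:
                pos = rest.index(flag)
                if pos + 1 < len(rest):
                    info[field] = rest[pos + 1]
        return info
    return None
```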

If this had been a real attack, we would’ve immediately remediated the compromised Okta and AWS accounts.

But red team exercises are more than a great way to pressure test our SOC: they also give us an opportunity to learn how we can help a customer improve their security.

Where did we get our signal to detect this attack in AWS Cloud?

After a red team exercise is complete, we always go back and ask ourselves the following question: What helped us get visibility that we can alert against in the future?

In this case, the security signals that helped us detect and respond to the bad behavior in AWS Cloud were:

- Okta System Log: These logs contain all of the Okta IdM events, including SSO activity into third-party applications like AWS.

- Duo logs: Correlating these MFA logs with the Okta logs is what let us spot that something was off.

- AWS CloudTrail: This helped us track ConsoleLogin events and control plane activity, which is how we got our initial lead and tracked API activity from the compromised AWS access keys.

- Amazon GuardDuty: GuardDuty was an essential tool that helped us look for and identify bad behavior in AWS.

- CrowdStrike: CrowdStrike helped us identify which specific cloud instances were compromised, and also helped us spot persistence. Endpoint visibility in cloud compute is often overlooked.

If we notice gaps in security signal during a red team exercise, we have a conversation with the customer to see how we can potentially help them implement more or different signals so that our team has full visibility into their environment.

How to protect your own org from these types of attacks

A sophisticated red team exercise should produce lessons both for your team of analysts and for your customer.

Here are our takeaways from this exercise that you can use in your own org:

Protect both API access as well as your local compute resources in the cloud.

Use AWS controls like Service Control Policies to restrict API access across an AWS organization – this will help you manage who can do what in your environment and where they’re allowed to come from.

The cloud is a double-edged sword: APIs are great because they let cloud users experiment and scale easily (they’re everything everybody loves about the cloud). But API access also means that attackers who make their way into your environment can do a lot of damage at once. Protect that access and your local compute resources by restricting access and using the tools available to you.
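As one illustration of controlling “where they’re allowed to come from,” here is a Python sketch that builds a Service Control Policy denying requests from outside a set of allowed source IP ranges. The CIDRs are hypothetical (RFC 5737 documentation ranges), and the `aws:ViaAWSService` condition is included so calls AWS services make on a principal’s behalf aren’t blocked. Treat it as a starting point to test in a non-production organizational unit: a deny like this also blocks legitimate callers that don’t egress through those ranges, such as workloads reaching AWS through VPC endpoints, where `aws:SourceIp` isn’t present.

```python
from __future__ import annotations

import json

# Hypothetical corporate egress ranges (RFC 5737 documentation addresses).
ALLOWED_CIDRS = ["192.0.2.0/24", "203.0.113.0/24"]


def ip_restriction_scp(allowed_cidrs: list[str]) -> str:
    """Return an SCP document (JSON) that denies API calls from outside allowed_cidrs."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideAllowedRanges",
                "Effect": "Deny",
                "Action": "*",
                "Resource": "*",
                "Condition": {
                    "NotIpAddress": {"aws:SourceIp": allowed_cidrs},
                    # Don't block calls AWS services make on a principal's behalf.
                    "Bool": {"aws:ViaAWSService": "false"},
                },
            }
        ],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    print(ip_restriction_scp(ALLOWED_CIDRS))
```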

MFA isn’t your silver bullet anymore.

Spoiler alert: We’ve said before that MFA won’t stop every attacker. While it’s still important to have, it’s not enough on its own. This particular attack underscores just how easy it is for an attacker to download a pretty sophisticated, automated attack from GitHub and unleash it on your org in an effort to bypass MFA.

As automated phishing packages like this become more common, every org will need to find more ways to protect itself from man-in-the-middle (MitM) attacks. Layering on a preventative control such as Okta’s adaptive multi-factor authentication can help.

The industry needs better signal to detect compromised cloud users, particularly those compromised through MitM attacks, and to detect them earlier in the attack sequence.

Existing log data already contains plenty of signal for behavioral detections. Microsoft does a pretty reasonable job of spotting these compromises with its Azure AD Identity Protection service.
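One classic behavioral detection built from existing sign-in logs is “impossible travel”: two consecutive logins by the same user that are too far apart to cover in the time between them. A MitM proxy hosted far from the victim could trip this kind of check. The sketch below is a hypothetical illustration, not how any vendor implements it: it assumes each login has already been geolocated upstream, uses the haversine great-circle distance, and picks an arbitrary 900 km/h (roughly airliner speed) as the threshold.

```python
from __future__ import annotations

import math
from dataclasses import dataclass
from datetime import datetime

EARTH_RADIUS_KM = 6371.0


@dataclass
class Login:
    user: str
    time: datetime
    lat: float  # assumed to come from a GeoIP lookup done upstream
    lon: float


def haversine_km(a: Login, b: Login) -> float:
    """Great-circle distance between two logins, in kilometers."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    d_phi = phi2 - phi1
    d_lambda = math.radians(b.lon - a.lon)
    h = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, h)))


def impossible_travel(logins: list[Login], max_kmh: float = 900.0) -> list[tuple[Login, Login]]:
    """Flag consecutive logins by the same user that imply travel faster than max_kmh."""
    flagged = []
    last_seen: dict[str, Login] = {}
    for login in sorted(logins, key=lambda item: item.time):
        prev = last_seen.get(login.user)
        if prev is not None:
            hours = (login.time - prev.time).total_seconds() / 3600
            distance = haversine_km(prev, login)
            # Simultaneous logins from different places count as infinitely fast.
            if distance > 0 and (hours == 0 or distance / hours > max_kmh):
                flagged.append((prev, login))
        last_seen[login.user] = login
    return flagged
```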

Want to know when we share more stories about stopping evil from lurking in AWS and thwarting sophisticated phishing attacks?

Subscribe to our EXE blog to get new posts sent directly to your email.